After dominating the AI industry with the H100 GPU, Nvidia unveils the H200: the new benchmark among graphics cards dedicated to artificial intelligence. Discover how this powerhouse could take existing AIs like ChatGPT to the next level, while paving the way for new, even more advanced models!

Nvidia was once known mainly to gamers for its powerful graphics cards. In recent years, however, the American company has become one of the giants of the tech world.

First, during the cryptocurrency craze, its GPUs were massively used for "mining" Bitcoin and other cryptocurrencies. More recently, with the rise of generative AI, its chips have been used to train models such as GPT.

This is precisely what allowed Nvidia to join the exclusive club of companies with a market capitalization of over a trillion dollars, because training AI models requires huge numbers of graphics cards.

GPUs, the lifeblood of AI

GPUs are ideal for AI applications because they can perform many matrix multiplications in parallel, and these operations are at the heart of how neural networks work. GPUs are therefore essential both for training AI models and for running them (inference).
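To illustrate why matrix multiplication parallelizes so well, here is a minimal sketch in plain Python of a single fully connected neural-network layer. The function name `matmul` and the toy input and weight values are hypothetical, chosen only for illustration; real frameworks run this on the GPU with optimized libraries rather than Python loops.

```python
# Minimal sketch: one fully connected layer is a matrix multiplication.
# Each output cell is an independent dot product, which is why a GPU can
# compute thousands of them at the same time. Values below are hypothetical.

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, given as lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    # Cell (i, j) depends only on row i of `a` and column j of `b`,
    # so all n * m cells could be computed in parallel.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]

# Hypothetical toy data: a batch of 2 inputs with 3 features each,
# passed through a layer with 3 inputs and 2 outputs.
inputs = [[1.0, 2.0, 3.0],
          [4.0, 5.0, 6.0]]
weights = [[0.1, 0.2],
           [0.3, 0.4],
           [0.5, 0.6]]

outputs = matmul(inputs, weights)
print(outputs)
```

Here the loops run one after another, but on a GPU each of the four output cells (and, in a real model, millions of them) can be handled by separate cores simultaneously.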

As Ian Buck, vice president of HPC (high-performance computing) at Nvidia, explains: "To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory."

Today, the GAFAM (Google, Apple, Facebook, Amazon, Microsoft) and AI players such as OpenAI and Anthropic are waging a merciless war to secure as many GPUs as possible, so much so that a shortage is looming for the general public.

Until now, the Nvidia H100, launched in 2022, was considered the benchmark among AI-dedicated GPUs. Designed for data centers, it was the most powerful card available.

Against this backdrop, on Monday, November 13, 2023, the company announced a new card set to shake up the established order: the H200.

The new champion of AI graphics cards

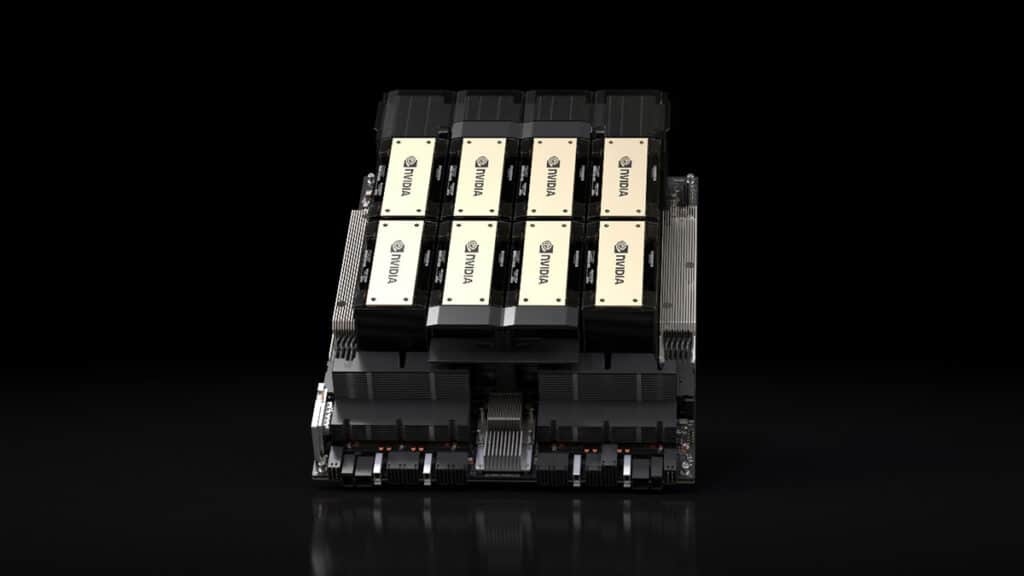

Officially named the HGX H200 Tensor Core, this new GPU is based on the Hopper architecture and is designed to accelerate AI applications. It could also enable the creation of even more powerful AI models.

In addition, thanks to this component, existing AIs such as ChatGPT could respond much faster.

According to Nvidia, the H200 is the first GPU to feature HBM3e memory. This gives it 141 GB of memory and 4.8 terabytes per second of bandwidth.

For comparison, this represents 2.4 times the bandwidth of the Nvidia A100 launched in 2020. Technology is evolving fast, very fast.
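To give a feel for what this bandwidth could mean for response time, here is a rough back-of-the-envelope sketch, not a benchmark. It assumes that generating one token requires reading every model weight from memory once, so bandwidth sets a lower bound on time per token. The A100 figure is derived from the article's own "2.4 times" ratio, and the 13-billion-parameter model stored in 16-bit precision (2 bytes per parameter) is purely hypothetical, as is the helper name `min_ms_per_token`.

```python
# Back-of-the-envelope sketch under stated assumptions (not a benchmark):
# - generating one token reads every model weight from memory once,
#   so memory bandwidth gives a lower bound on time per token;
# - the A100 bandwidth is derived from the announced 2.4x ratio;
# - the 13-billion-parameter, 16-bit model is hypothetical.

H200_BANDWIDTH_TB_S = 4.8   # announced by Nvidia
RATIO_VS_A100 = 2.4         # announced by Nvidia
A100_BANDWIDTH_TB_S = H200_BANDWIDTH_TB_S / RATIO_VS_A100  # about 2.0 TB/s

def min_ms_per_token(params_billion, bytes_per_param, bandwidth_tb_s):
    """Hypothetical helper: lower bound on ms per token if weight reads dominate."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    seconds = weight_bytes / (bandwidth_tb_s * 1e12)
    return seconds * 1e3

for name, bandwidth in [("A100", A100_BANDWIDTH_TB_S), ("H200", H200_BANDWIDTH_TB_S)]:
    print(f"{name}: {min_ms_per_token(13, 2, bandwidth):.1f} ms per token (lower bound)")
```

Under these assumptions the script prints 13.0 ms for the A100 and 5.4 ms for the H200. Real-world latency also depends on compute, batching and software, so the actual gain may differ.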

Power as a remedy for scarcity?

Experts agree that the lack of computing power was one of the main obstacles to AI progress in 2023.

This shortfall has slowed the deployment of existing AI models and the development of new ones, and the shortage of powerful AI GPUs is one of its main causes.

For example, OpenAI has often said that a lack of GPUs slows ChatGPT down, forcing the company to place limits on its chatbot in order to keep the service running.

One obvious solution, therefore, is to produce more chips, but making each chip more powerful also helps solve the problem.

Thanks to the H200, the AI models behind ChatGPT will be able to serve more customers simultaneously. This is why this GPU is likely to be very attractive to AI companies and cloud providers.

According to Ian Buck, "With NVIDIA H200, the industry's leading end-to-end AI supercomputing platform just got faster to solve some of the world's most important challenges."

Price and launch date

The H200 will be available in several formats. The HGX H200 is a server board offered in four-way and eight-way configurations, and it is compatible with both the hardware and the software of HGX H100 systems.

The Nvidia GH200 Grace Hopper Superchip, for its part, combines a CPU and a GPU in a single package for even more AI power. Together, these options should cover a wide range of needs.

The first H200-based instances will be deployed in 2024, and the GPU will be available from cloud service providers and system manufacturers starting in the second quarter of 2024. Pricing has not yet been announced.